meet musicBox

an interactive, multisensory experience

Role

concept devo

back-end devo

fabrication

tools

arduino ide

team

Soomin Kim

timeline

sept 2018 - dec 2018

results

functional physical prototype, open house demo

special thanks

clarissa verish

orit shaer

amy banzaert

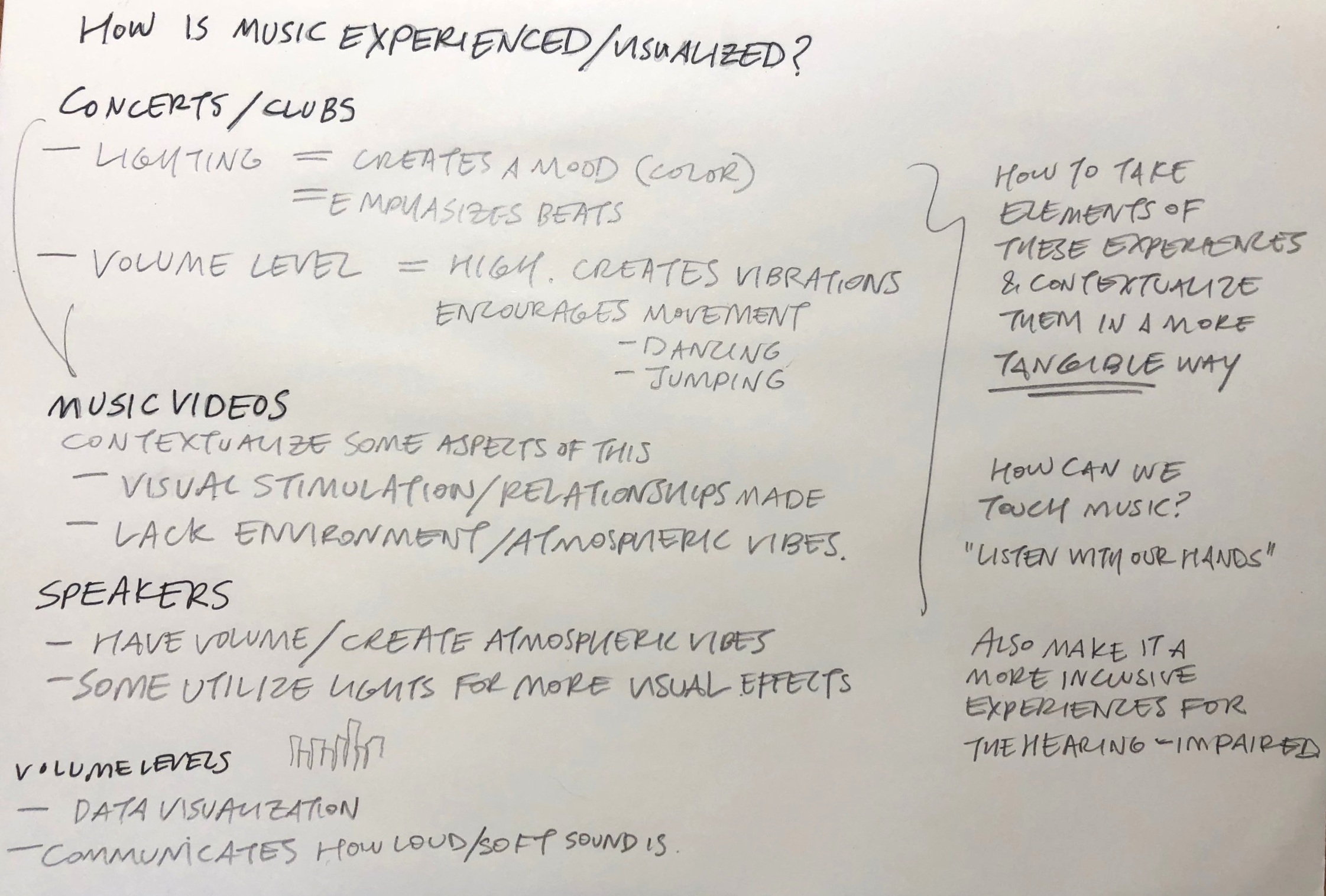

From September to December of 2018, my team for Tangible User Interfaces designed a novel interface called MusicBox, which sought to reframe music as a multi-sensory experience.

Our project brief was to “explore the possibilities of tangible interaction within the domain of music making, and develop a system that creates a meaningful and delightful musical experience for the users.” We set out to rethink music not as a listening-centric activity, but as an interactive experience that engages many of the other senses. This focus also led us to consider accessibility within the realm of music consumption.

Our 2.5-month process began with concept development, ideation and experimentation, and concluded with a fully functional physical prototype, which was demoed in an open house presentation.

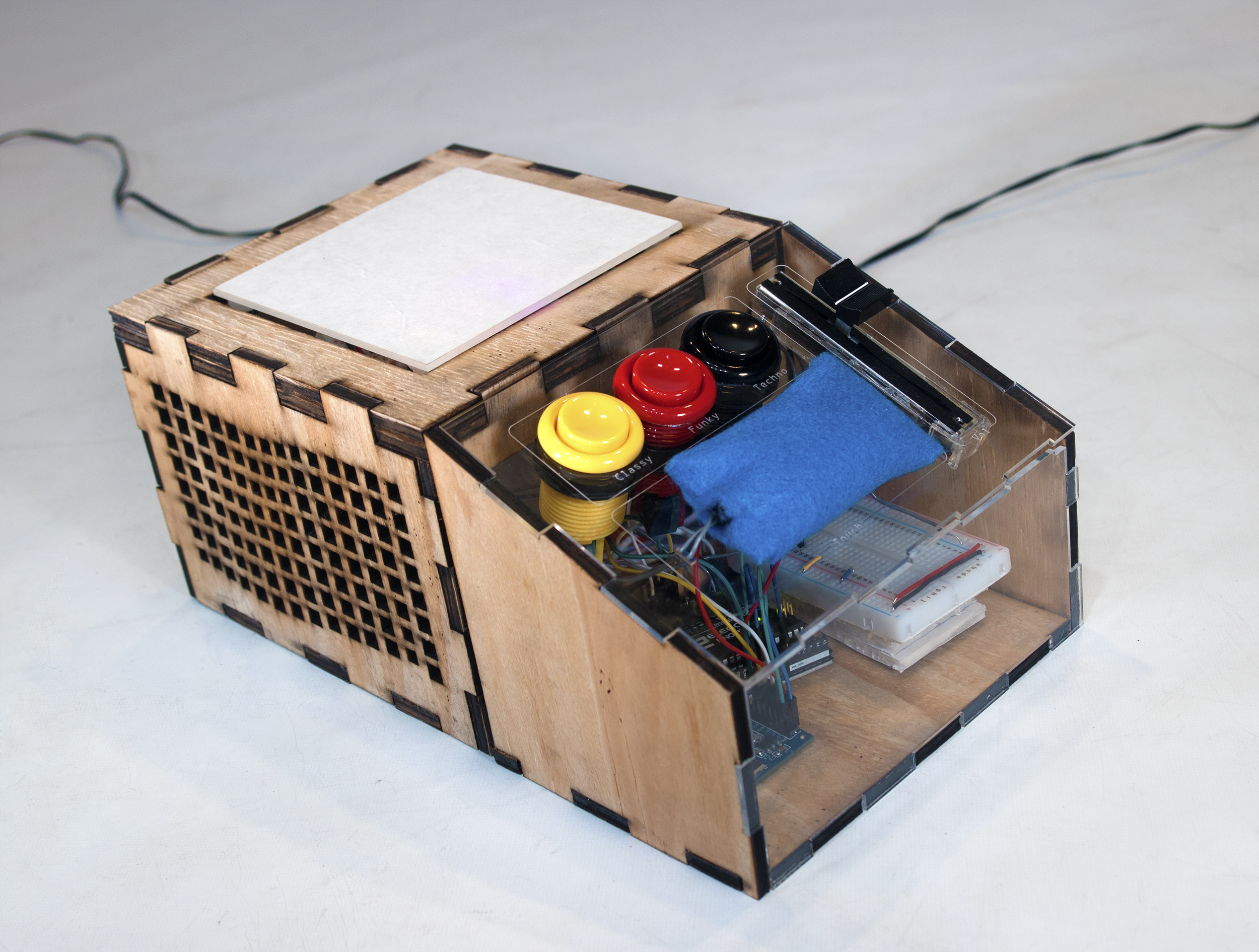

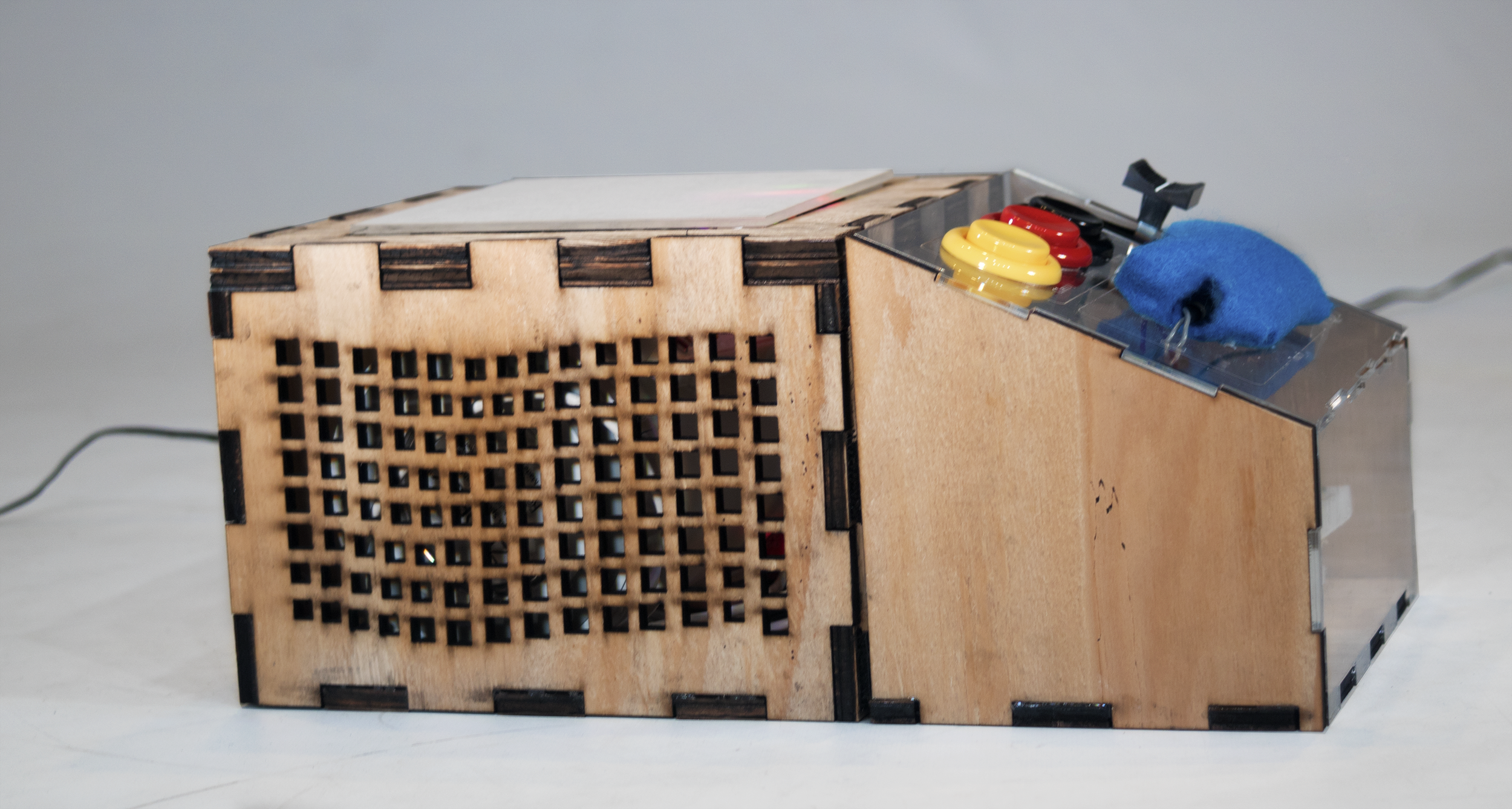

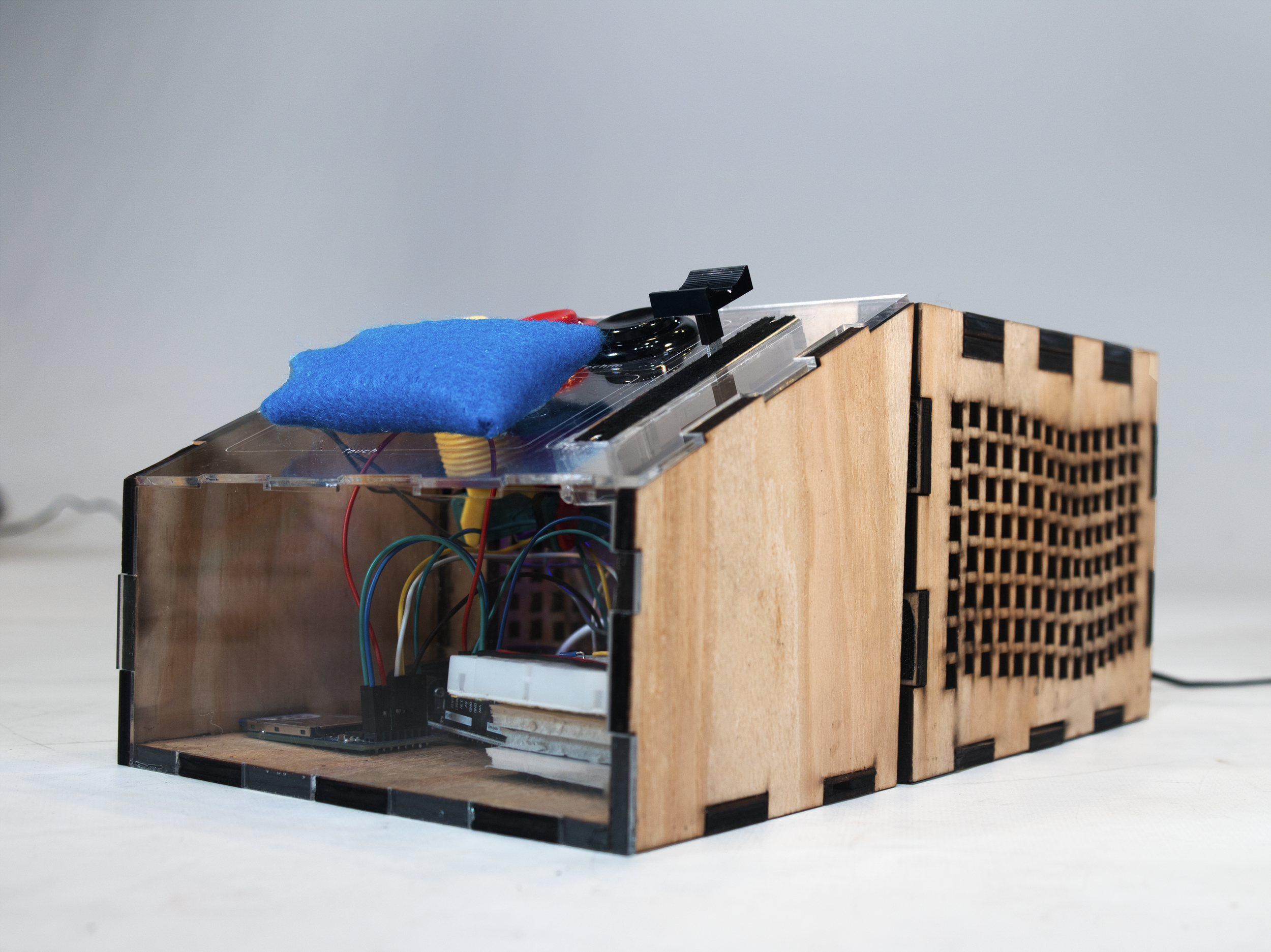

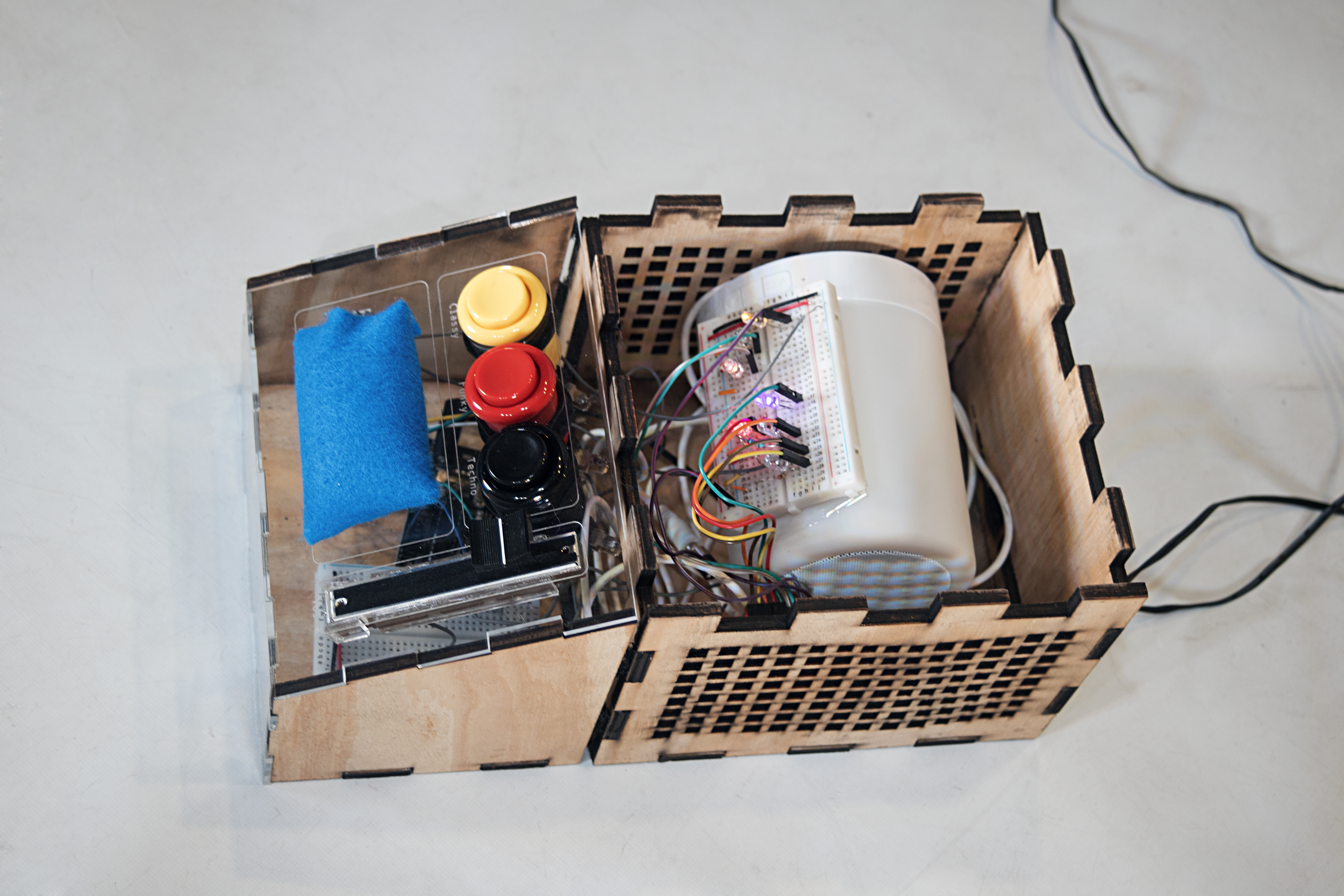

The project was fabricated from laser-cut acrylic, laser-cut wood, two Arduino Uno boards, the SparkFun Audio Spectrum Shield, a speaker, and several other electrical components. All code was written in the Arduino IDE, and can be found here.

final design

MusicBox is a new tangible user interface that reframes music as a multisensory experience, incorporating sight and touch along with sound.

Use the buttons to pick a mood, and adjust the volume to see how it affects the strength of sensory output. Watch colored lights react to different layers within the music, and feel the boom of the beat under the palm of your hand.

features

see

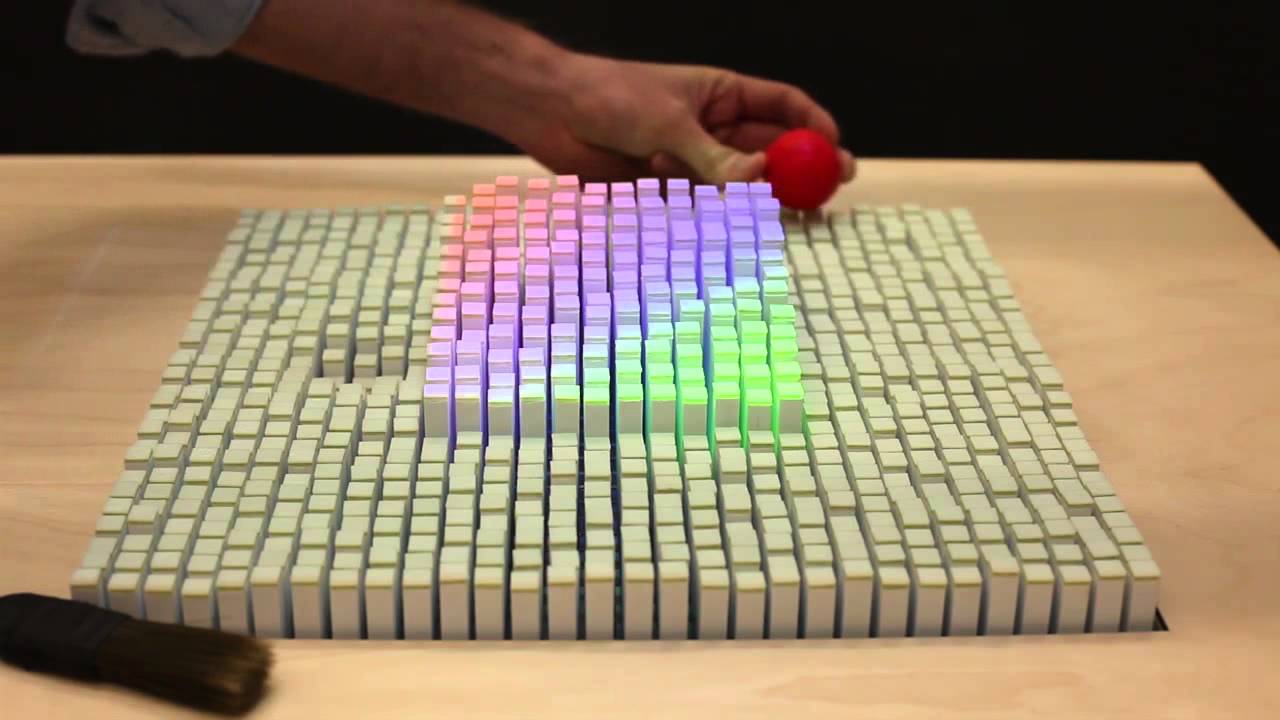

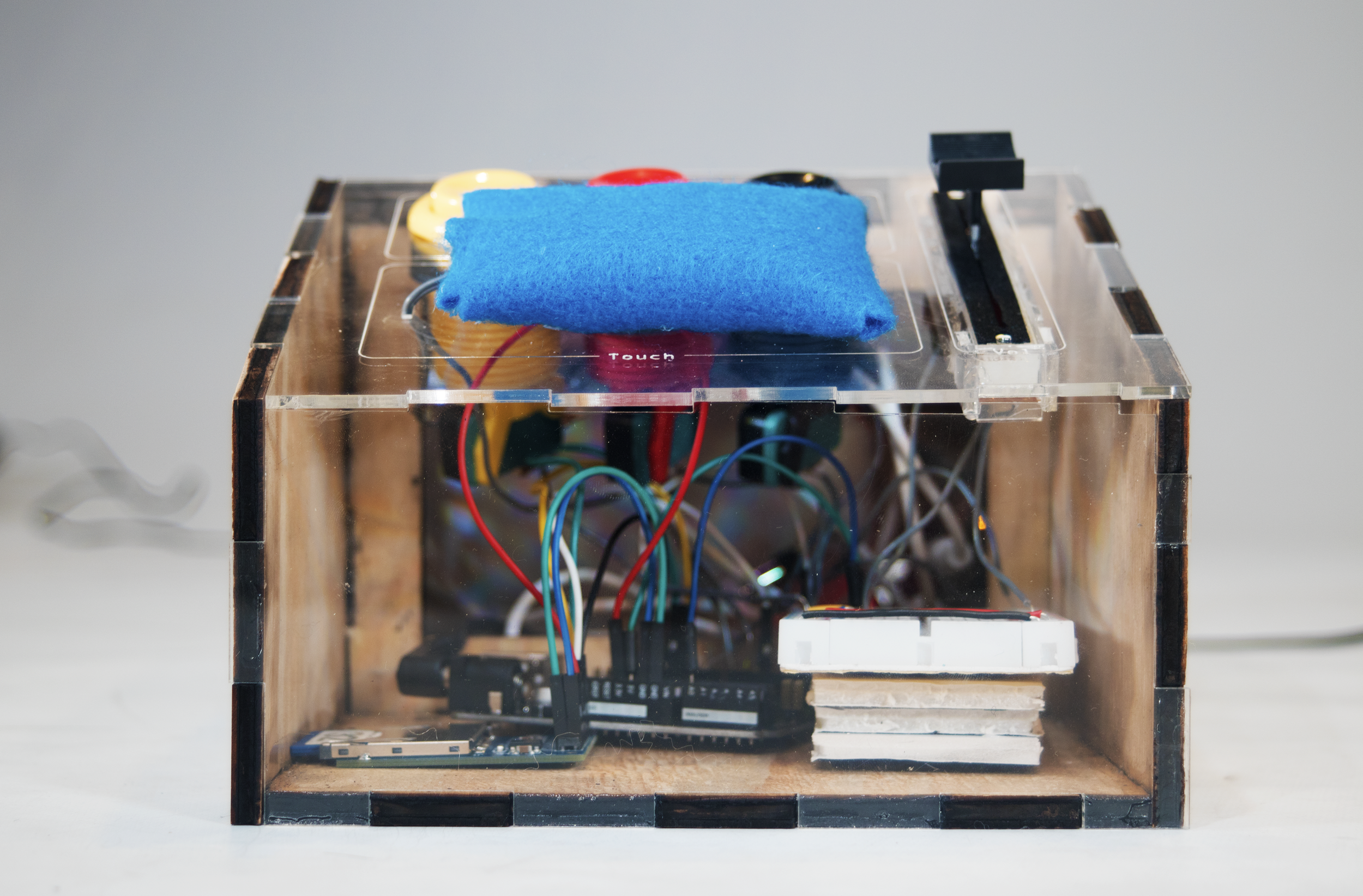

LED lights through the semi-transparent panel on the top dance along to the music - in the dark, it’s your own personal light show.

Each light is aligned to a different frequency in the music, so you’ll see everything from the bass to the drums to the electric guitar.

feel

A soft cushion vibrates under the palm of your hand to the beat and the bass.

Feel the intensity of the music when it’s heightened - a tactile version of concert speakers booming.

control

Three different buttons control the mood - choose between classy, funky, and techno.

A volume control slider also changes the effects of the output, from the brightness of the lights to the intensity of the vibration speaker.

explore

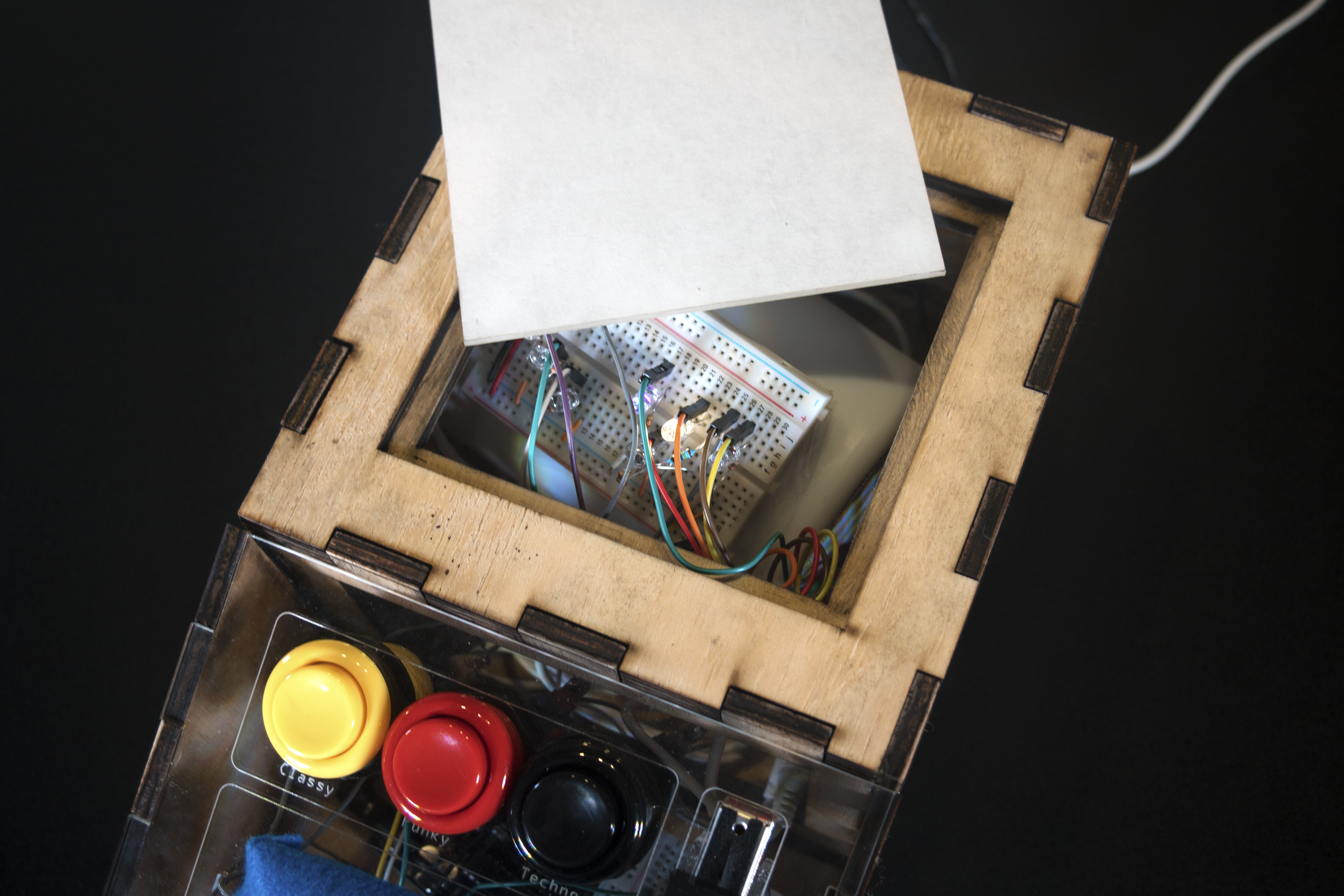

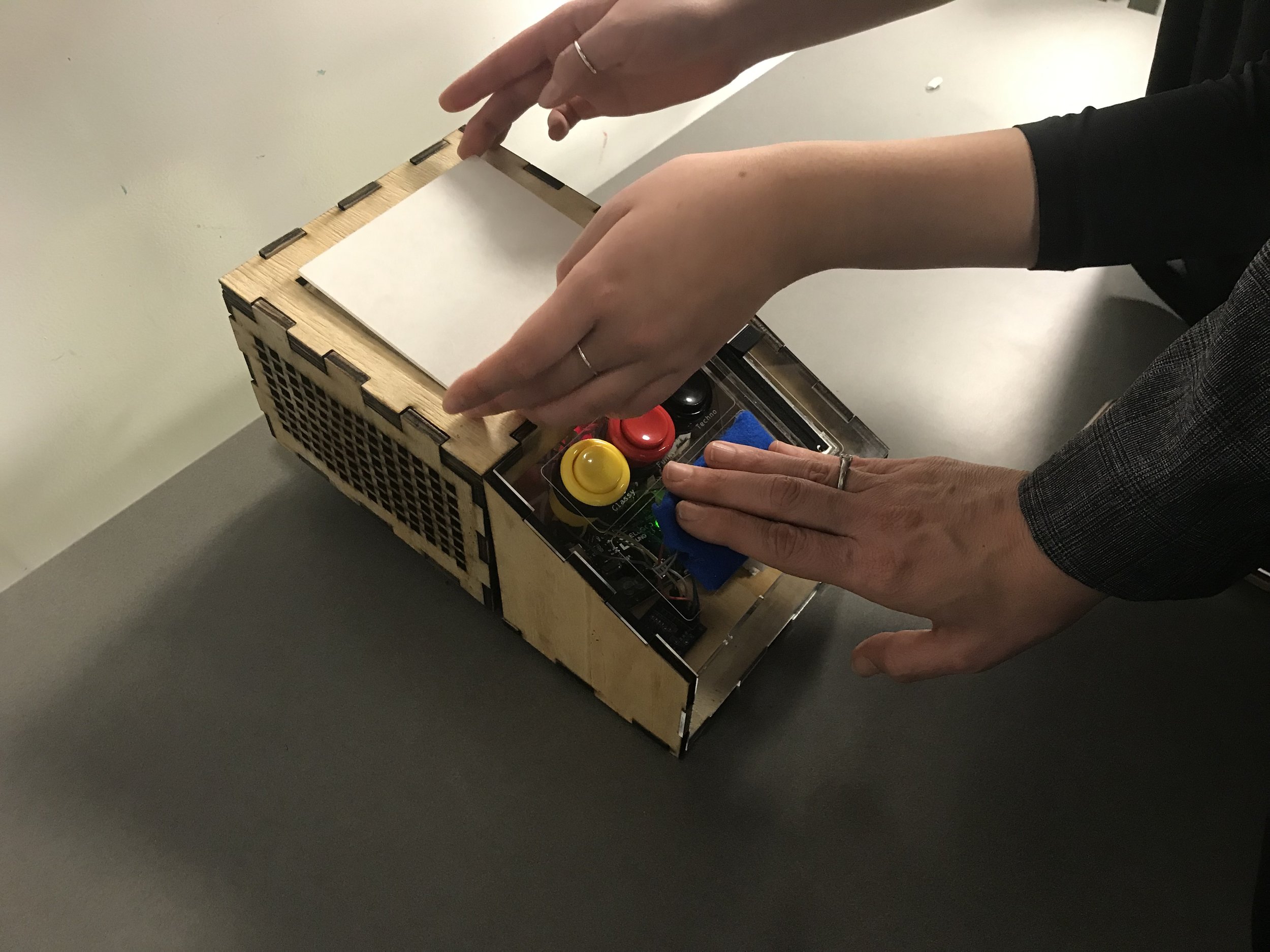

A clear, removable front casing and removable top panel encourage exploration and discovery by exposing the inner workings of the entire machine.

background

problem

Listening to music is a deeply engaging and immersive experience. People interact with music on a spectrum of engagement - from passively listening to playlists on the go, to dancing & singing along at concerts. In everyday moments like these, hearing is treated as the dominant sense, and people often overlook how involved our other senses are in shaping musical experiences.

Traditionally multisensory ways of experiencing music, such as concerts that combine light shows with live performers, the feeling of the bass through large speakers, and the shared experience of being in a crowd, can be inaccessible to people with sensory impairment or physical disability.

goal

How might we reframe the experience of “listening” to music in a multisensory, accessible way?

development

ideation

Our vision for MusicBox stemmed from our shared interest in manipulating the experience of listening to music, and challenging how people define such experiences.

We reflected upon our own relationships with music to gain insight on how music has been visualized for people and narrowed it down to three different performative states:

Lighting at concerts and clubs

Sampling recorded music, played and altered by DJs

Booming volume levels of speakers and music editing software

Conceptually, we hoped to take elements from these observations and contextualize them into a more tangible experience through the incorporation of light, color, and haptics.

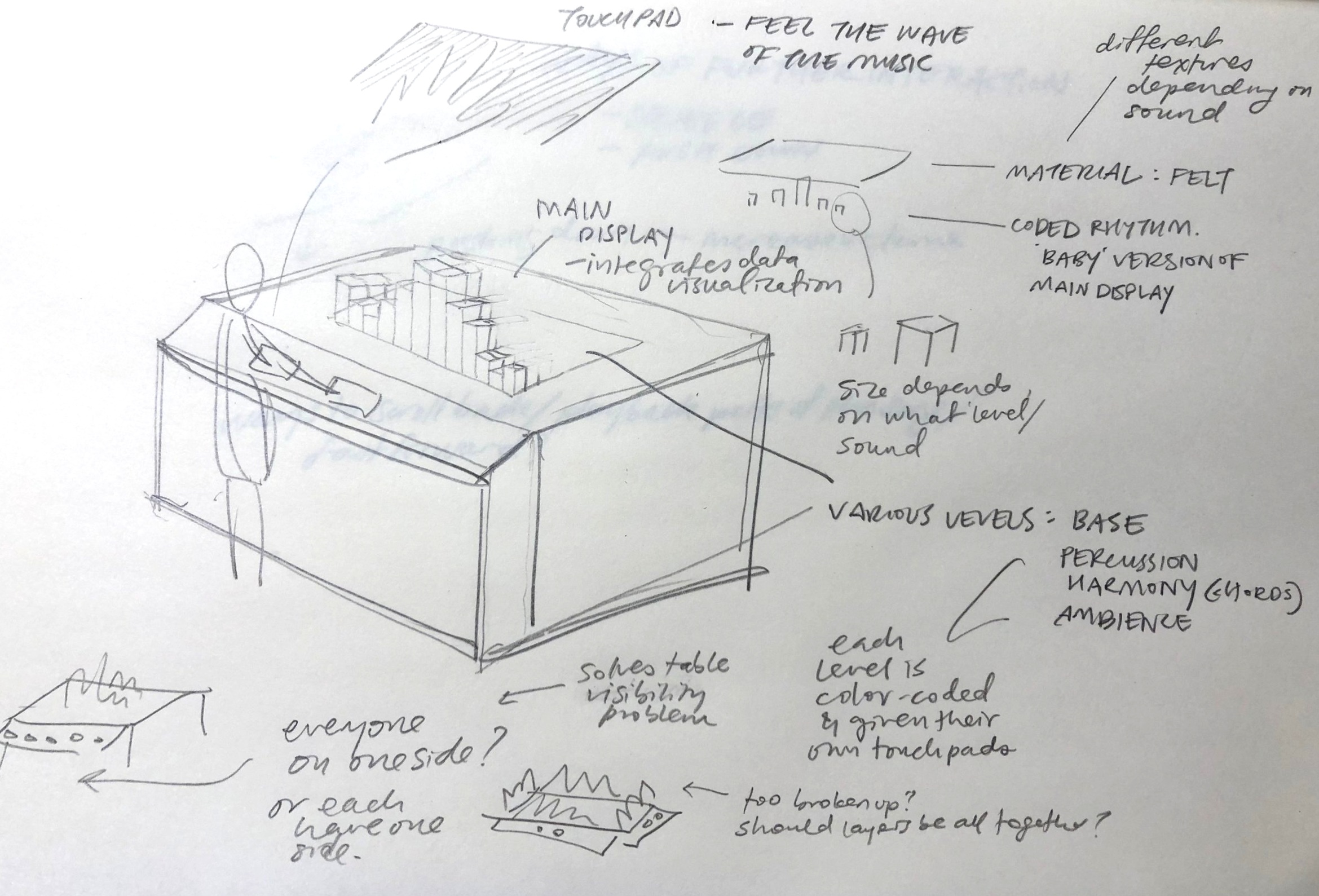

Initial ideas included a physically moving waveform in the center of a table-sized interface, a dynamic graphic that represented different layers of the music, and multi-user control over a song through physical movements like pushing and manipulating textured panels. We were very intrigued by the idea of being able to physically feel music under the palm of your hand.

conceptual design

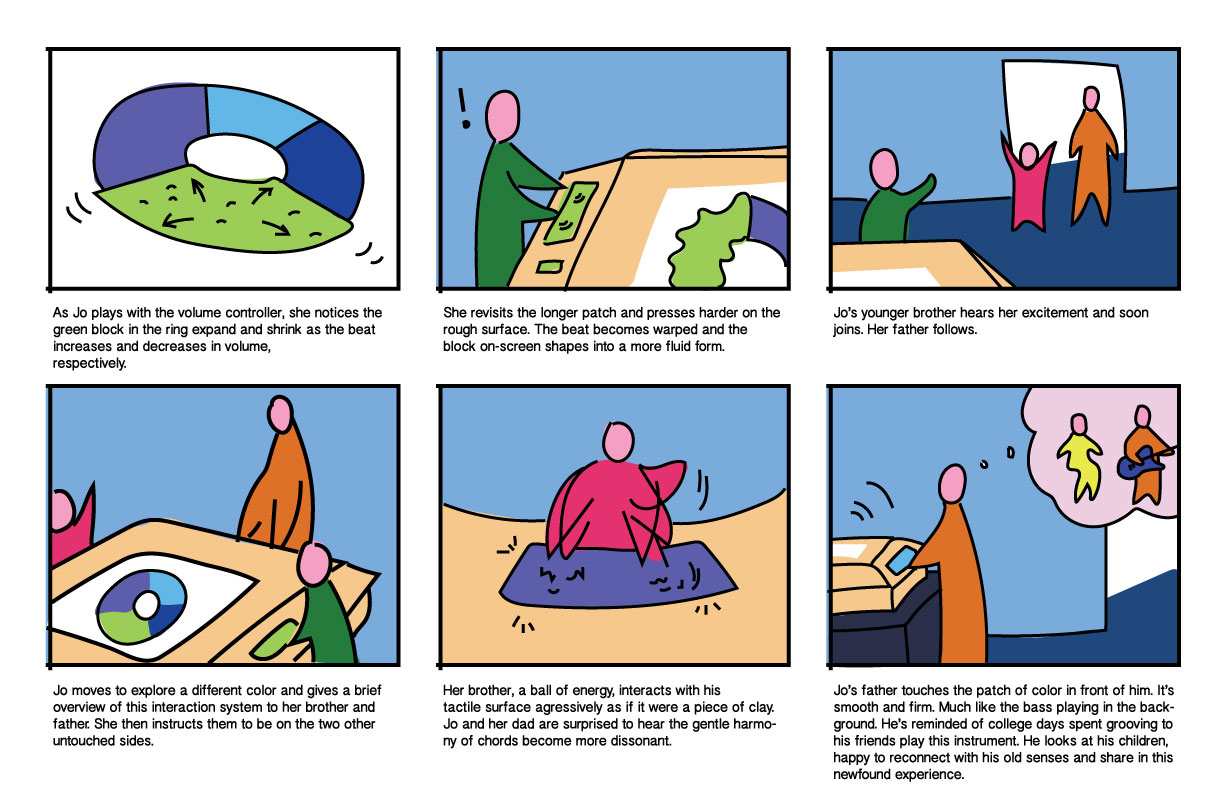

Our first iteration eventually settled on a table-sized wooden structure, with a dynamic graphic in the middle representing different ‘layers’ of a song, such as melody, harmony, bass, and beat. Each layer could be experienced both visually through the graphic and tactilely under the palm of the hand through physical waveform panels.

Each panel could be manipulated by a user. Pushing on a panel, for example, would warp the sound, and the user could hear the change, see it on the graphic, and feel it under their hand. The multi-user approach would allow for mutual discovery and collaboration.

To demonstrate our concept, Soomin created a storyboard of two children and their father experimenting with the installation at a museum.

design revisions

After a project update, we received initial feedback from classmates and our professor and refined our concept further. We revised some aspects for feasibility, and others because we wanted to encourage personal discovery rather than multi-person interaction.

prototyping

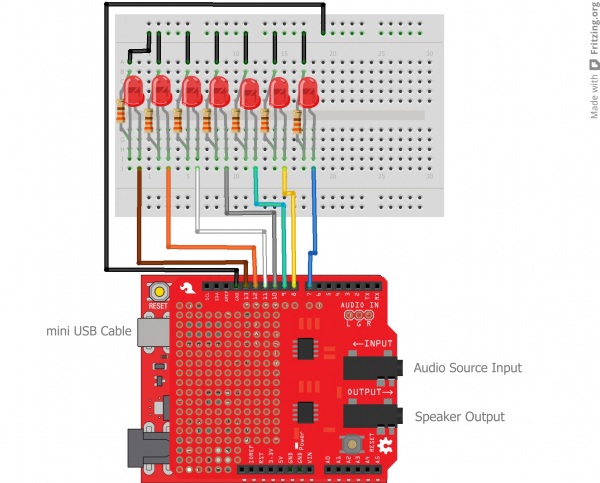

We decided to implement this project using the Arduino Uno board and the Arduino IDE on the recommendation of our professor.

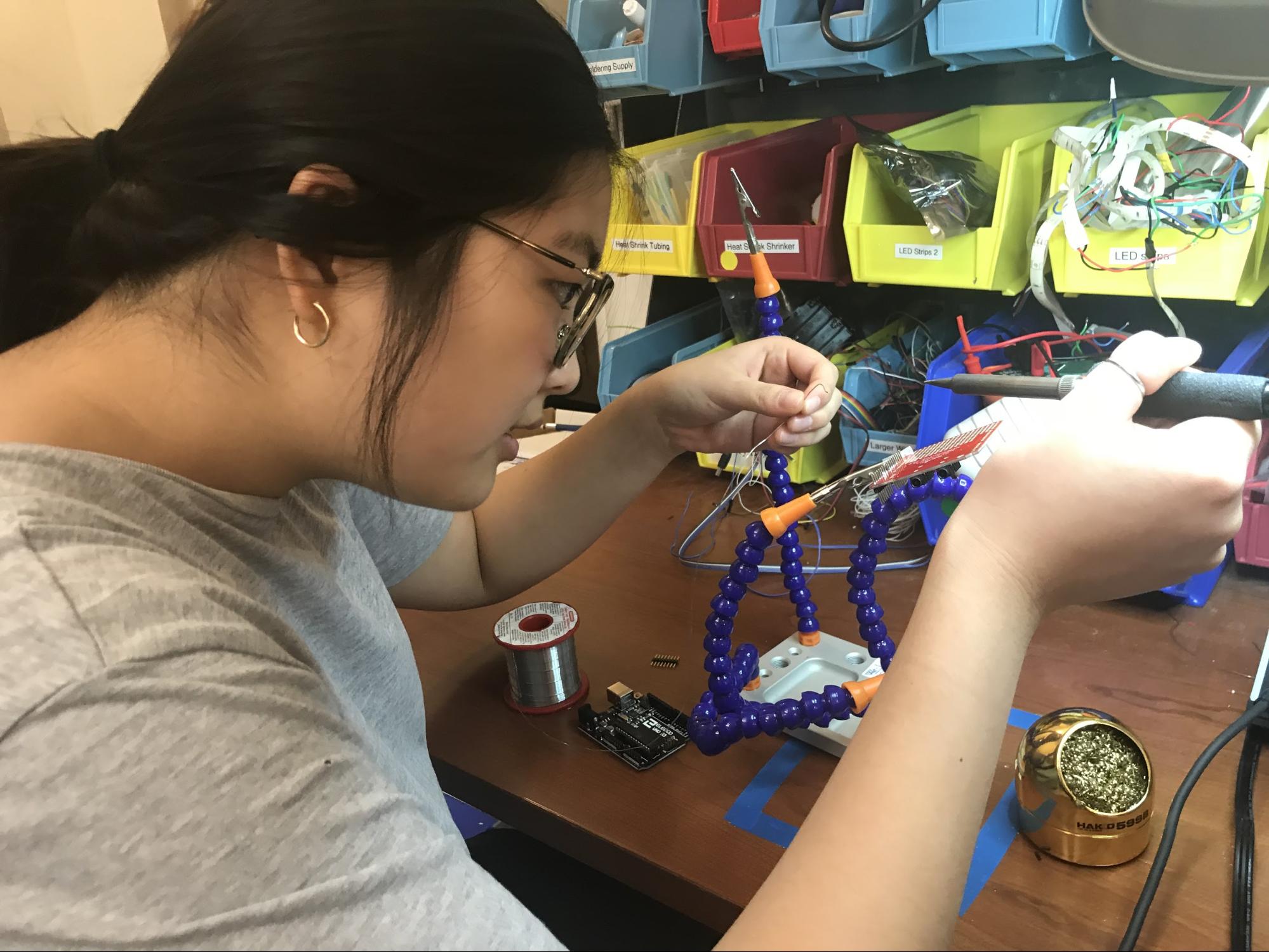

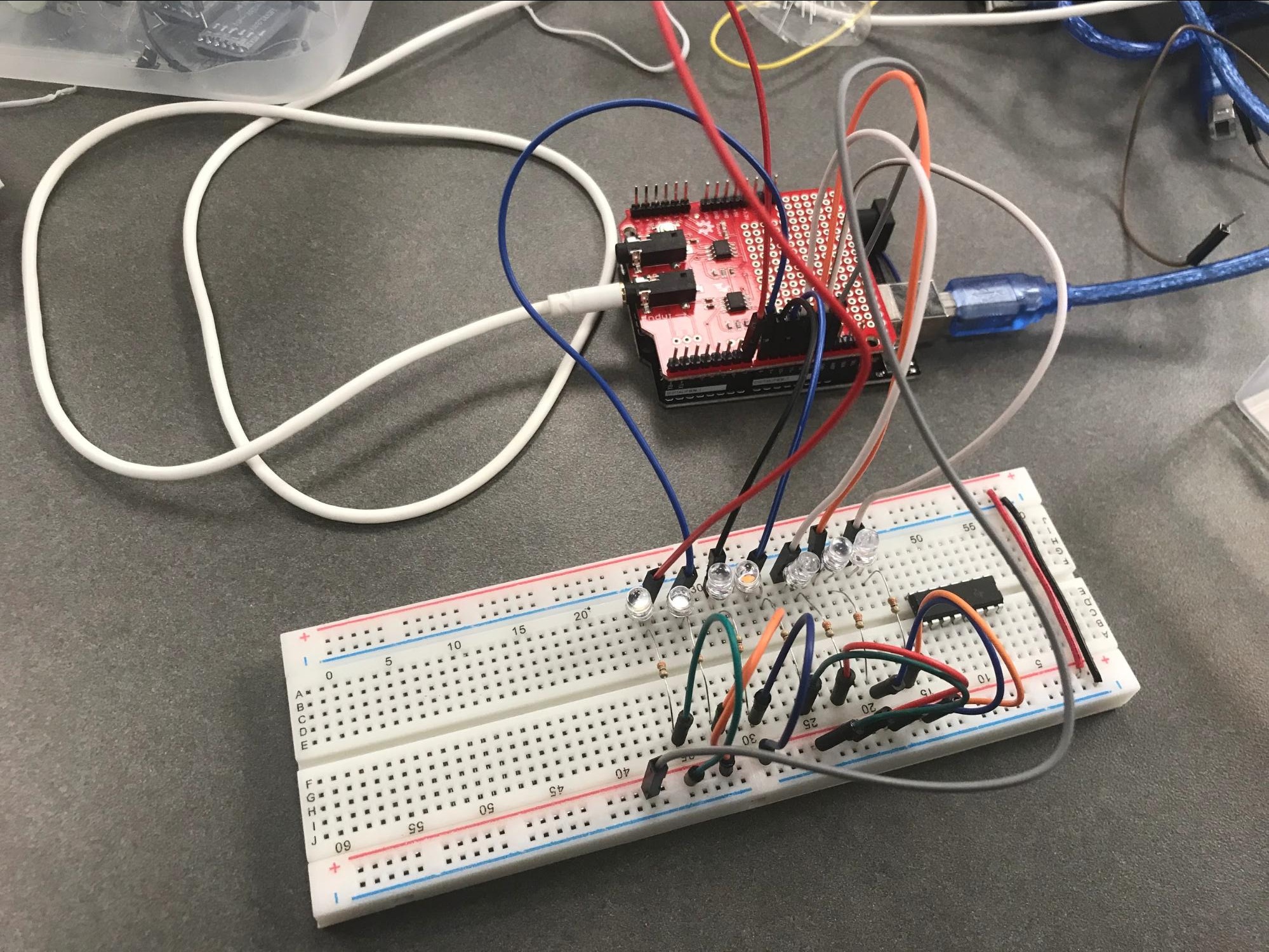

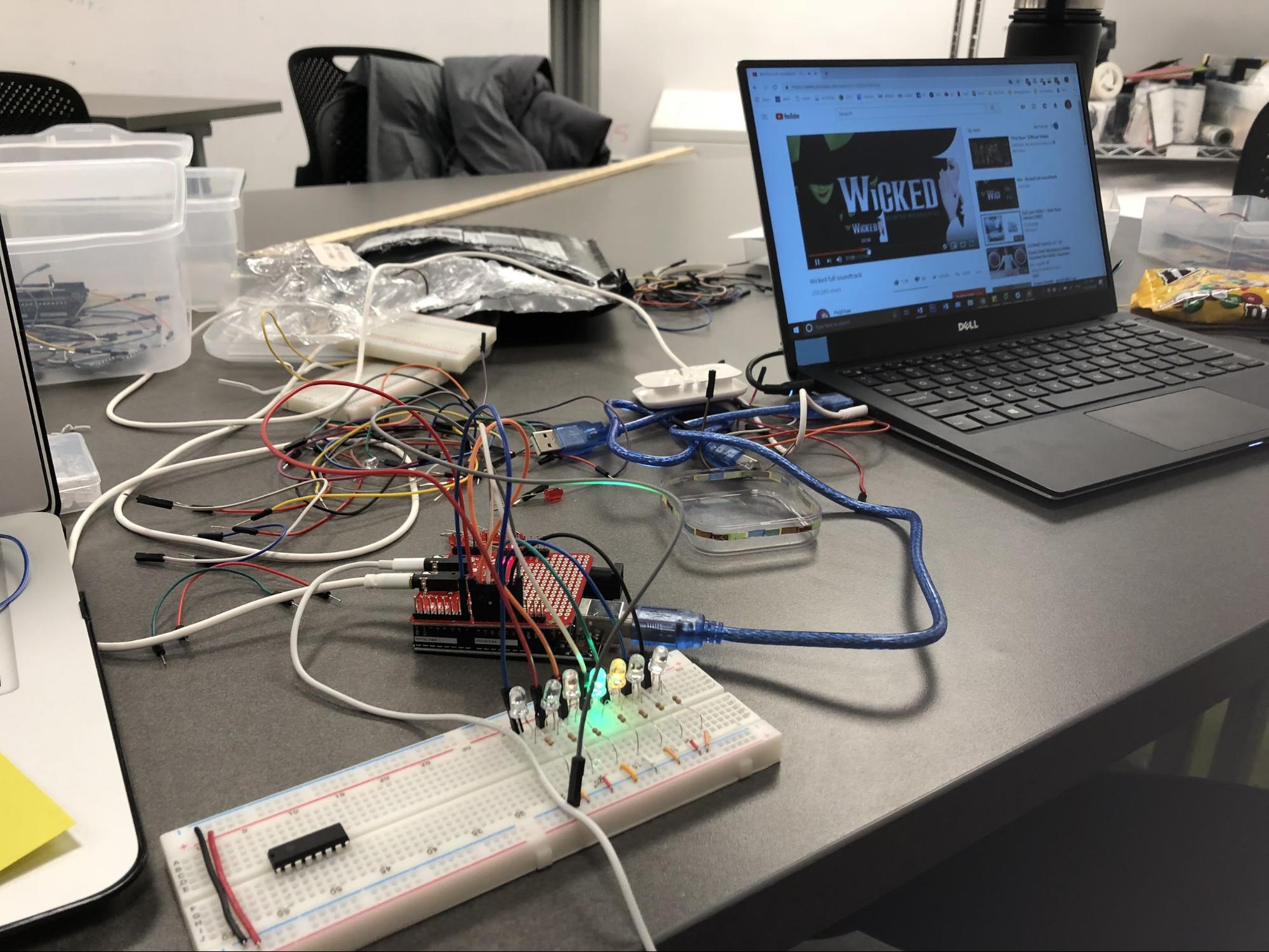

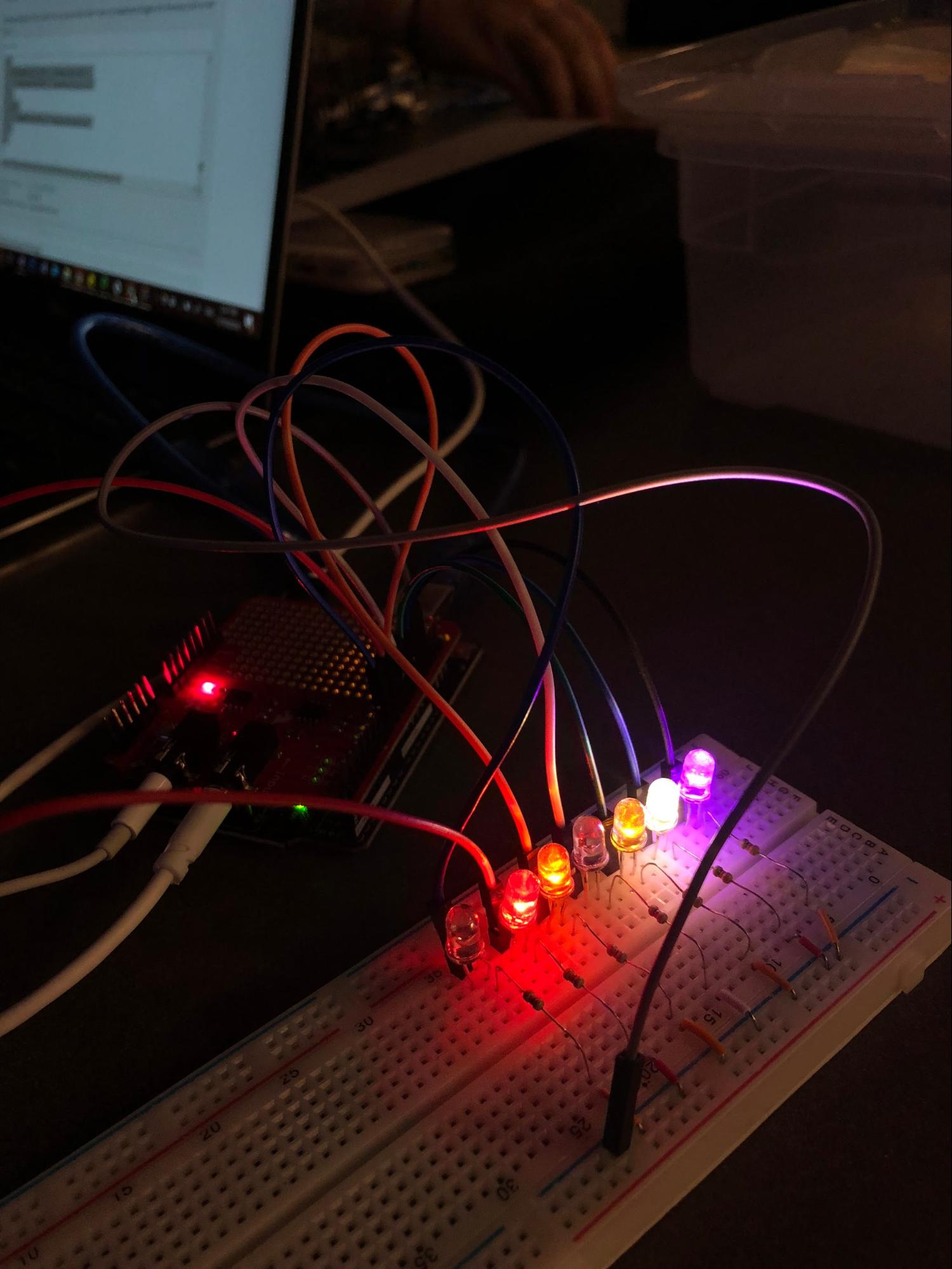

After researching more about audio input, we decided to work with the Sparkfun Spectrum Shield. It is capable of splitting stereo input into 7 bands per channel, which is what our project needed for its center display of LED lights dancing to the music. Once we gathered our materials, we soldered the stackable headers onto the Spectrum shield and began programming the LED lights to dance along with the music.
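As a rough illustration of the "lights dancing to the music" step (not our actual project code), the sketch below turns seven band levels into seven LED brightness values. It assumes each band arrives as a 10-bit reading (0 to 1023), as Arduino's `analogRead()` would return on an Uno, and that each LED is dimmed with an 8-bit PWM value. The names `bandToBrightness` and `bandsToBrightness` and the noise-floor value are hypothetical and would need tuning on real hardware.

```cpp
#include <stdint.h>

const int kBands = 7;        // the shield reports 7 bands per channel
const int kAdcMax = 1023;    // largest 10-bit reading on an Uno
const int kNoiseFloor = 80;  // hypothetical: readings at or below this count as silence

// Convert one raw band level (0..1023) into an LED brightness (0..255).
uint8_t bandToBrightness(int raw) {
    if (raw <= kNoiseFloor) return 0;   // ignore background hiss
    if (raw > kAdcMax) raw = kAdcMax;   // clamp out-of-range input
    // Use long so the multiplication cannot overflow a 16-bit int on the Uno.
    long scaled = (long)(raw - kNoiseFloor) * 255 / (kAdcMax - kNoiseFloor);
    return (uint8_t)scaled;
}

// Convert all seven bands at once; out[i] is the brightness for LED i.
void bandsToBrightness(const int raw[kBands], uint8_t out[kBands]) {
    for (int i = 0; i < kBands; ++i) {
        out[i] = bandToBrightness(raw[i]);
    }
}
```

In a real sketch, each `out[i]` would be passed to `analogWrite()` on the pin driving that LED, once per pass through `loop()`.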

physical casing and construction

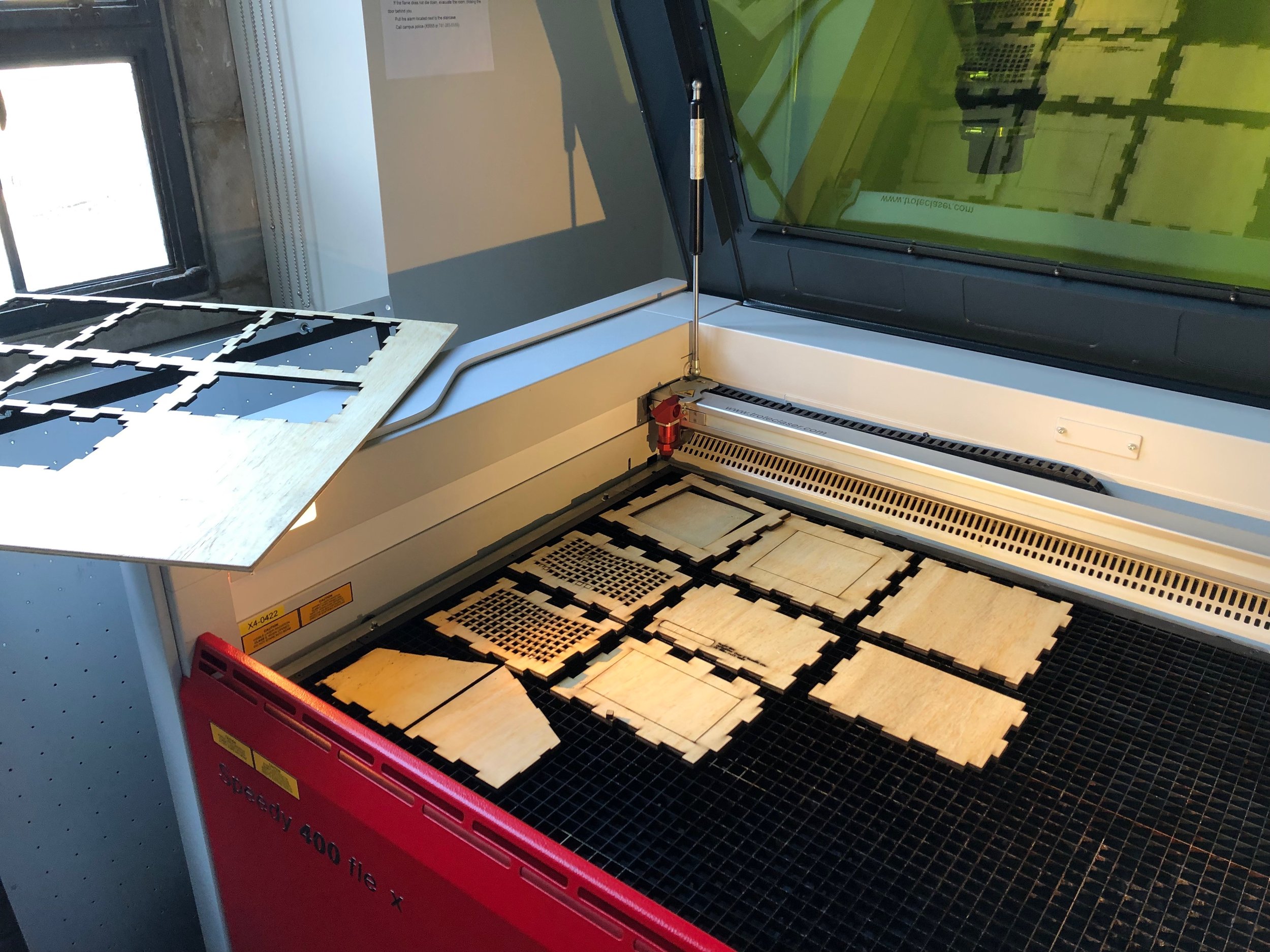

Soomin was responsible for the physical casing of MusicBox, and designed all of the shapes for the sides and panels in Adobe Illustrator. We cut and engraved all of the pieces with a Trotec laser cutter before gluing them together.

For our project’s physical casing, we drew inspiration from the nostalgia of antique music boxes, but also incorporated physical aspects of speakers into our design, resulting in the wavy checkered pattern, which would also allow sound to travel more freely.

We wanted the casing to be transparent enough to encourage learning about and discovering MusicBox’s inner workings, so we used acrylic for the center display and side control panel. We also attached the front panel with velcro for easy removal, and the top panel lifts off easily as well.

wiring

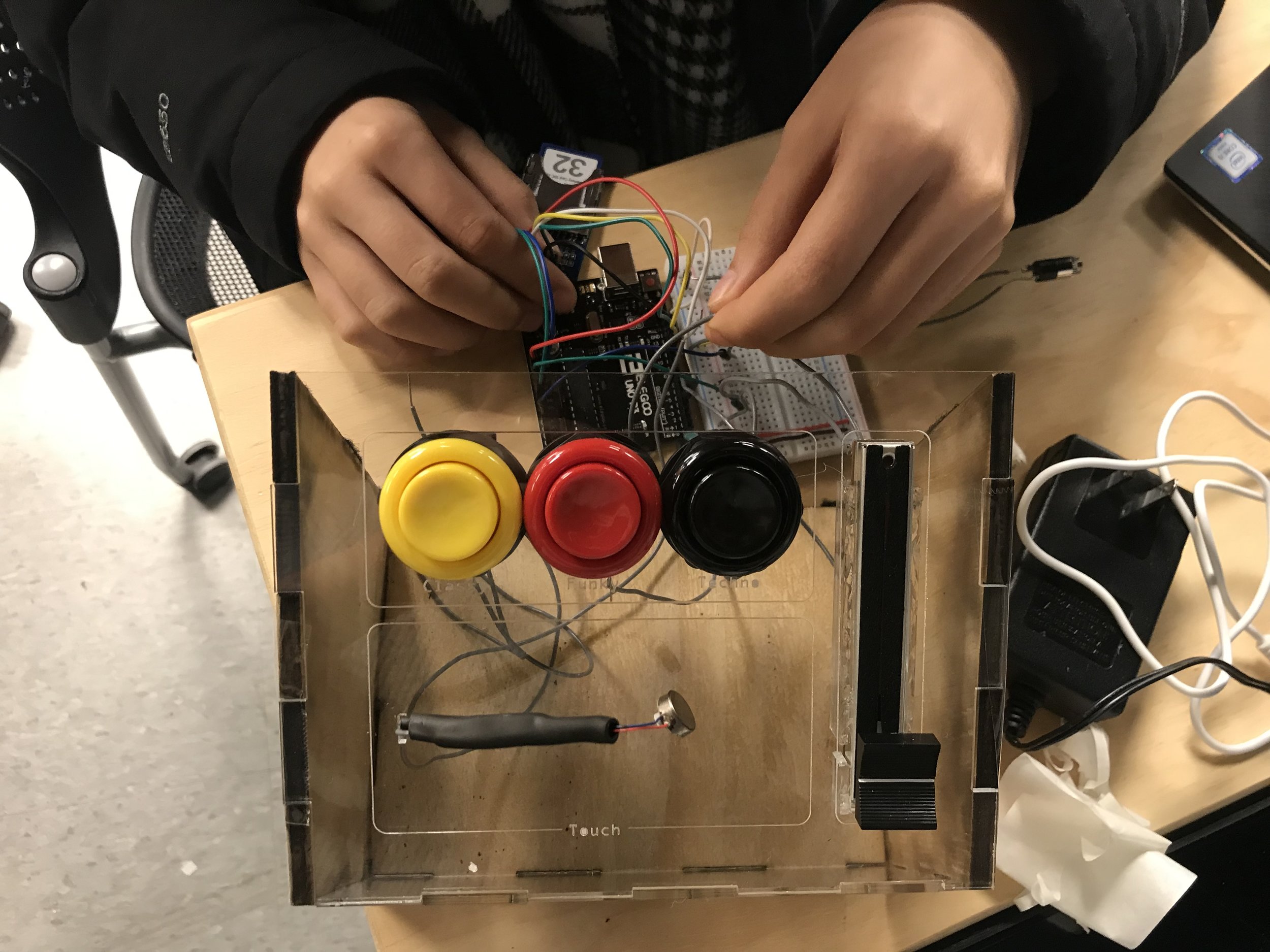

My primary responsibility was the inner wiring of the project, along with the programming of all necessary components of the inputs (slider and buttons) and the outputs (lights and vibration motor).

Three instrumental music files (‘funky’, ‘techno’, and ‘classical’) were stored as .wav files on an SD card module. One Arduino Uno board plays the selected file out from a pin to an audio jack. From there, a 3.5mm stereo jack runs this signal into our other Arduino Uno board, which is fitted with a SparkFun Audio Spectrum Shield. Our LED lights and vibration motor read the frequency levels from the shield and react accordingly to the music.

We also incorporated a slider and buttons as input methods. The slider alters the volume and the buttons change which music file plays. They are attached to the same Arduino as the SD card module. The Arduino code reads the button state as digital input, and triggers the correct song. It reads the position of the volume slider as analog input, and sets the volume accordingly through the volume() function we built into the code.
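To make the "feel" side of this more concrete, here is a minimal sketch of how band levels could drive the vibration motor. It is an illustration under stated assumptions, not the code we shipped: it treats the two lowest of the seven bands as "bass", assumes 10-bit band readings, and uses a hypothetical threshold so the motor only kicks in on stronger beats. The names `bassLevel` and `motorDuty` are made up for this example.

```cpp
#include <stdint.h>

const int kBands = 7;            // bands per channel from the shield
const int kBassBands = 2;        // assumption: the two lowest bands count as bass
const int kBeatThreshold = 300;  // hypothetical: below this the motor stays off

// Average the lowest bands to get a single bass level (0..1023).
int bassLevel(const int raw[kBands]) {
    long sum = 0;
    for (int i = 0; i < kBassBands; ++i) {
        sum += raw[i];
    }
    return (int)(sum / kBassBands);
}

// Map a bass level to an 8-bit PWM duty for the vibration motor.
uint8_t motorDuty(int bass) {
    if (bass < kBeatThreshold) return 0;  // quiet passage: no vibration
    if (bass > 1023) bass = 1023;         // clamp out-of-range input
    return (uint8_t)(bass / 4);           // 10-bit level -> 8-bit duty
}
```

The threshold is the main design choice here: without it the cushion would buzz faintly all the time, while with it the vibration reads as distinct pulses on the beat.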
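The input handling described above can be sketched roughly as follows. This is a simplified illustration rather than our actual code: the file names, the number of volume steps, and the helper names `sliderToVolume` and `selectTrack` are all assumptions made for the example. It assumes the slider gives a 10-bit analog reading (0 to 1023) and that each button has already been read as pressed or not pressed.

```cpp
const int kTrackCount = 3;
const int kVolumeLevels = 8;  // hypothetical number of discrete volume steps

// Hypothetical file names for the three moods stored on the SD card.
const char* const kTracks[kTrackCount] = {"funky.wav", "techno.wav", "classical.wav"};

// Map a slider reading (0..1023) to a volume step (0..kVolumeLevels - 1).
int sliderToVolume(int raw) {
    if (raw < 0) raw = 0;
    if (raw > 1023) raw = 1023;
    // Use long so the multiplication cannot overflow a 16-bit int on the Uno.
    return (int)((long)raw * kVolumeLevels / 1024);
}

// Return the index of the first pressed button, or keep the current track
// when no button is pressed.
int selectTrack(const bool pressed[kTrackCount], int current) {
    for (int i = 0; i < kTrackCount; ++i) {
        if (pressed[i]) return i;
    }
    return current;
}
```

In a sketch, `pressed[i]` would come from `digitalRead()` on each button pin and `raw` from `analogRead()` on the slider pin, with the results handed to whatever plays the file and sets the volume.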

other deliverables

To accompany our prototype and our presentation in the final demonstration, we also designed a poster and video that summarized our ideation process, implementation, and rationale.

conclusion and reflections

At the open house, we presented our concept and poster to attendees and demonstrated our prototype. My favorite part was when people actually got to try out the thing I had been working on the entire semester, and seeing their surprise and delight at feeling what they were seeing and hearing - exactly the goal that we wanted to achieve. I was very pleased that we were able to communicate the concept.

I thought it was incredibly rewarding to be able to work on a physical object - all of my previous experiences have only been digital, so it was really exciting to consider all of the new possibilities as we bring information and data back into the physical world through advanced technology and tangible user interfaces. I also learned valuable physical fabrication skills, like working with Arduinos and electrical circuits. Although the process was at times incredibly frustrating, sometimes to the point of tears, I’m proud of the result.

In a future iteration of MusicBox, it would be cool if users could upload their own music to experience it in a multisensory way. This application would be fun for kids, or those who are hearing impaired.